MapReduce is a programming pattern that has had a huge impact on the data analysis and big data community. Apache Hadoop lets you distribute data processing across machines and scale it with the number of nodes and cores.

One of the many cornerstones of this framework is that the code is shipped to, and executed on, the nodes where the data resides. Afterwards, only the transformed output of the map phase is shuffled and sorted over the network to the aggregators (the reducers) on different executors.
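To make the shuffle-and-sort step concrete, here is a minimal in-memory sketch, assuming `num_reducers` stands in for the executors that run the aggregators. The helper names `partition` and `shuffle_and_sort` are hypothetical and belong to neither Hadoop nor MapReduceSlim. Routing keys by a hash of the key is the usual default (Hadoop's default partitioner also hashes the key), but real frameworks let you plug in your own partitioner.

```python
import zlib
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def partition(key: str, num_reducers: int) -> int:
    # Send every occurrence of a key to the same reducer. zlib.crc32 is used
    # because Python's built-in hash() of str is randomized per process.
    return zlib.crc32(key.encode("utf-8")) % num_reducers


def shuffle_and_sort(
    mapped: Iterable[Tuple[str, int]], num_reducers: int
) -> List[Dict[str, List[int]]]:
    # One bucket per reducer; inside a bucket, values are grouped by key.
    buckets: List[Dict[str, List[int]]] = [defaultdict(list) for _ in range(num_reducers)]
    for key, value in mapped:
        buckets[partition(key, num_reducers)][key].append(value)
    # Each reducer then receives its keys in sorted order.
    return [dict(sorted(bucket.items())) for bucket in buckets]


if __name__ == "__main__":
    mapped_pairs = [("the", 1), ("cat", 1), ("the", 1), ("sat", 1)]
    for index, bucket in enumerate(shuffle_and_sort(mapped_pairs, num_reducers=2)):
        print(f"reducer {index}: {bucket}")
```

The bucket a word lands in depends only on its hash. Therefore both counts for "the" end up at the same reducer, which can then sum them without talking to any other executor.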

MapReduce is hard to use on its own, so it usually is deployed with

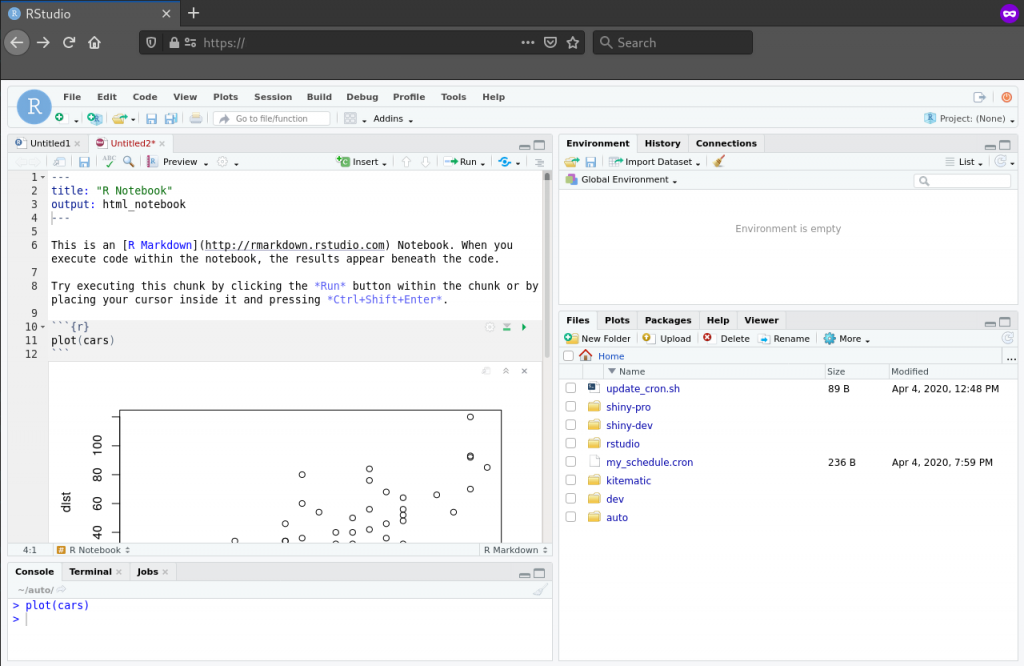

Apache Hadoop or Apache Spark. To play around with it without either one of those large frameworks, I created one in Python – MapReduceSlim. It emulates all core features of the MapReduce. It has one difference, it loads each line of the files separately into the map function. In the case of Apache Hadoop, it would be block-wise. This provides a nice solution to understand the behavior and the pattern of MapReduce and how to implement a mapper and reducer.
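The line-wise behavior can be sketched as a tiny driver. This is not MapReduceSlim's actual code. `run_line_wise` and `input_files` are hypothetical names, passing the file name as the map key is an assumption made only for this illustration, and the result is returned as a dict instead of being written to an output file.

```python
import os
from collections import defaultdict
from typing import Any, Callable, Dict, Iterable, List, Tuple

# Hypothetical type aliases: a mapper/reducer takes (key, values) and yields pairs.
Pair = Tuple[Any, Any]
Mapper = Callable[[str, str], Iterable[Pair]]
Reducer = Callable[[Any, List[Any]], Iterable[Pair]]


def input_files(path: str) -> List[str]:
    # Accept a single file, or every regular file in a directory (sorted by name).
    if os.path.isdir(path):
        names = sorted(os.listdir(path))
        return [os.path.join(path, n) for n in names if os.path.isfile(os.path.join(path, n))]
    return [path]


def run_line_wise(path: str, mapper: Mapper, reducer: Reducer) -> Dict[Any, Any]:
    grouped: Dict[Any, List[Any]] = defaultdict(list)
    for file_name in input_files(path):
        with open(file_name, encoding="utf-8") as handle:
            for line in handle:
                # Map phase: one call per line (assumption: the file name is the key).
                for key, value in mapper(file_name, line):
                    grouped[key].append(value)
    results: Dict[Any, Any] = {}
    # Sort phase, then one reducer call per distinct key with all of its values.
    for key in sorted(grouped):
        for out_key, out_value in reducer(key, grouped[key]):
            results[out_key] = out_value
    return results
```

A block-wise engine would instead hand the mapper larger chunks of a file. Feeding single lines keeps each map call small and easy to trace while experimenting.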

Classic WordCount Example

Mapper function

```python
# Hint: with Hadoop Streaming, the mapper reads its input from
# standard input (STDIN); in MapReduceSlim, each input line is
# passed to the function as `values`.
def wc_mapper(key: str, values: str):
    # remove leading and trailing whitespace
    line = values.strip()
    # split the line into words
    words = line.split()
    for word in words:
        # emit the (word, 1) pair; with Hadoop Streaming it would be
        # written to standard output (STDOUT) instead
        yield word, 1
```
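Note that `str.split()` only splits on whitespace, so this mapper counts "The", "the" and "the," as three different keys. If that matters for your text, you can lowercase and tokenize with a regular expression instead. The variant below, `wc_mapper_normalized`, is hypothetical and not part of MapReduceSlim. It keeps the same `(key, values)` signature, so it should work wherever `wc_mapper` does.

```python
import re
from typing import Iterator, Tuple

# Hypothetical tokenizer: runs of word characters (Unicode letters, digits,
# underscore) and apostrophes; punctuation such as "," or "." is dropped.
WORD_PATTERN = re.compile(r"[\w']+")


def wc_mapper_normalized(key: str, values: str) -> Iterator[Tuple[str, int]]:
    # Lowercase first so "The" and "the" become the same key.
    for word in WORD_PATTERN.findall(values.lower()):
        yield word, 1


if __name__ == "__main__":
    print(list(wc_mapper_normalized("", "The cat, the hat.")))
    # [('the', 1), ('cat', 1), ('the', 1), ('hat', 1)]
```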

Reducer function

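```python
def wc_reducer(key: str, values: list):
    # key is a word; values holds every count the mappers emitted for it
    current_count = 0
    word = key
    for value in values:
        current_count += value
    # emit the word together with its total count
    yield word, current_count
```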
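Because the reducer only adds up the counts for one word, the loop can also be written with the built-in `sum`. The name `wc_reducer_sum` is hypothetical. It is meant as a drop-in alternative with the same signature, assuming the framework passes all values for a key in a single call.

```python
from typing import Iterable, Iterator, Tuple


def wc_reducer_sum(key: str, values: Iterable[int]) -> Iterator[Tuple[str, int]]:
    # Same result as the explicit loop: add up all counts emitted for this word.
    yield key, sum(values)


if __name__ == "__main__":
    # After grouping, the reducer sees e.g. the key "the" with the values [1, 1, 1].
    print(list(wc_reducer_sum("the", [1, 1, 1])))  # [('the', 3)]
```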

Finally, run the mapper and the reducer with the MapReduceSlim framework:

```python
# Import the slim framework together with the word-count mapper and reducer
from map_reduce_slim import MapReduceSlim, wc_mapper, wc_reducer

### One input file version
# Read the content from one file and use the
# content as input for the run.
MapReduceSlim('davinci.txt', 'davinci_wc_result_one_file.txt', wc_mapper, wc_reducer)

### Directory input version
# Read all files in the given directory and
# use the content as input for the run.
MapReduceSlim('davinci_split', 'davinci_wc_result_multiple_file.txt', wc_mapper, wc_reducer)
```
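The directory version needs a directory of input files. One way to produce one is to split the single text file into several parts. `split_text_file` is a hypothetical helper, and the `part_000.txt` naming is an assumption. For example, `split_text_file('davinci.txt', 'davinci_split', parts=4)` would fill `davinci_split` with four part files.

```python
import os
from typing import List


def split_text_file(source: str, target_dir: str, parts: int) -> List[str]:
    # Hypothetical helper: spread the lines of one text file over at most
    # `parts` files, cutting only at line boundaries.
    with open(source, encoding="utf-8") as handle:
        lines = handle.readlines()
    os.makedirs(target_dir, exist_ok=True)
    chunk = max(1, -(-len(lines) // parts))  # ceiling division, at least one line
    written = []
    for index in range(0, len(lines), chunk):
        path = os.path.join(target_dir, f"part_{index // chunk:03d}.txt")
        with open(path, "w", encoding="utf-8") as out:
            out.writelines(lines[index:index + chunk])
        written.append(path)
    return written
```

No line is cut in half and MapReduceSlim maps line by line. The one-file run and the directory run should therefore produce the same word counts.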